Scientists in Europe and the USA – including a cosmologist from the University of Portsmouth – have begun modelling the universe for the first time using Einstein’s full general theory of relativity.

The teams have independently created two new computer codes they say will lead to the most accurate possible models of the universe and provide new insights into gravity and its effects.

A century after it was developed, Einstein’s theory remains the best available theory of gravity, consistently passing high-precision tests in the solar system and successfully predicting phenomena such as gravitational waves, which were directly detected earlier this year.

But because the equations involved are so complex, physicists have until now been forced to simplify the theory when applying it to the universe as a whole.

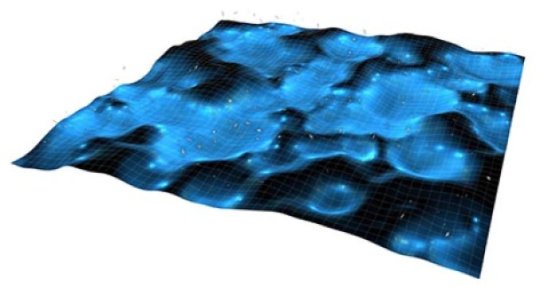

The two new codes are the first to use Einstein’s complete general theory of relativity to account for the effects of the clumping of matter in some regions and the lack of matter in others.

Dr Marco Bruni, of the Institute of Cosmology and Gravitation, Portsmouth, said: “This is a really exciting development that will help cosmologists create the most accurate possible model of the universe.

“Over the next decade we expect a deluge of new data coming from next generation galaxy surveys, which use extremely powerful telescopes and satellites to obtain high-precision measurements of cosmological parameters – an area where ICG researchers play a leading role.

“To match this precision we need theoretical predictions that are not only equally precise, but also accurate at the same level.

“These new computer codes apply general relativity in full and aim precisely at this high level of accuracy, and in future they should become the benchmark for any work that makes simplifying assumptions.”

The work comes from two teams: one from Case Western Reserve University and Kenyon College, Ohio, and the other a partnership between Dr Bruni, a reader in cosmology and gravitation, and Eloisa Bentivegna, a senior researcher at the University of Catania, Italy. Their papers will be highlighted today as Editors’ Suggestions by Physical Review Letters and Physical Review D, and in a Synopsis on the American Physical Society’s Physics website.

Both groups of physicists set out to determine whether small-scale structures in the universe produce effects on larger distance scales.

Both found that this is the case, and both present concrete tests that show a departure from a purely averaged model.

The researchers say computer simulations employing the full power of general relativity are the key to producing more accurate results and perhaps new or deeper understanding.

Professor Glenn Starkman, of the American team, said: “No one has modelled the full complexity of the problem before. These papers are an important step forward, using the full machinery of general relativity to model the universe, without unwarranted assumptions of symmetry or smoothness. The universe doesn’t make these assumptions, neither should we.”

Both groups independently created software applying the Einstein Field Equations, which describe the complicated relationships between the matter content of the universe and the curvature of space and time, at billions of places and times over the history of the universe.

Comparing the outcomes of these new simulations with those of traditional simplified models, the researchers found that the approximations break down in some circumstances.

Dr Bruni said: “Much more work will be needed in future to fully comprehend the importance of the differences between simulations based on Einstein equations and those making simplifying assumptions.

“In the end, as always in physics, it will be the interplay between theory and observations that will further our understanding of the universe.”

Agencies/Canadajournal

Canada Journal – News of the World Articles and videos to bring you the biggest Canadian news stories from across the country every day